Upstream Devlog

Collaboration with GPT-3

I have been working with GPT-3 for a semester so far. My interest in large natural language processing models stems from my fascination with the existential implications they have brought on for both humans and machines. The decision to work with this particular model is a result of my previous explorations in two domains, which I will elaborate on in the following section.

To provide additional context, I'd like to include a reference to the final prototype I made for my Major Studio 1, where I situated an image classification machine learning system (ml5.js running on p5.js) in front of its "psychic" → (a real-time visual representing the different weights of the model's output).

The idea of a feedback loop later became a major motif in my explorations with decision-making agents. I later became obsessed with the idea of "text" as the shape of identities: text as a low-level tool with which both humans and machines transpile and store information, creating layers of metaphor and, in turn, multiple feedback loops.

*The major inspiration for my second domain.*

A combination of these two domains led me to the exciting world of NLP models, which one could argue is the most "affective" area of current machine learning progress that can be appreciated by public audiences.

The progress and prototypes for this assignment served as a short detour from my thesis exploration. Jumping ahead to the postmortem for a bit: after the really helpful feedback from my crit session on Wednesday, I can now set up my progress in a more confined and hopefully clearer context.

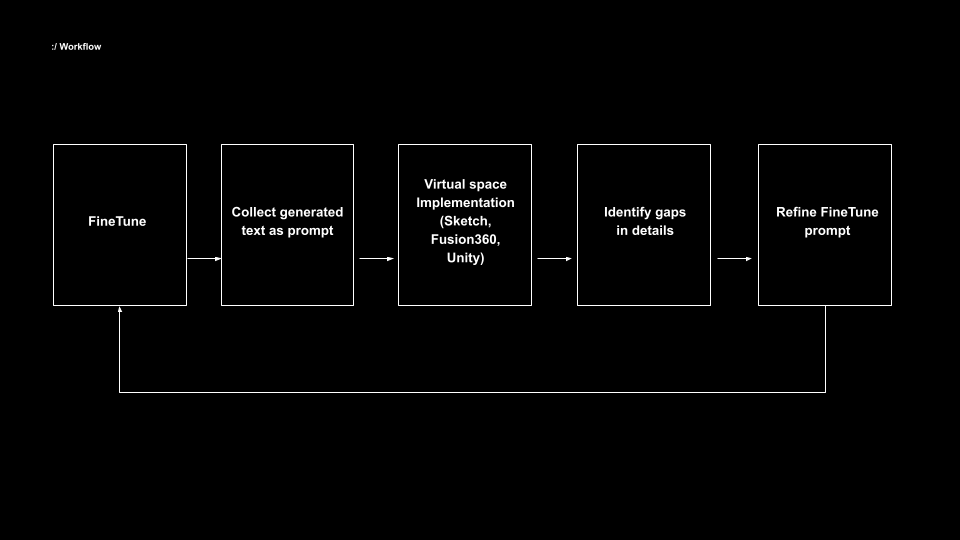

A general view of my workflow

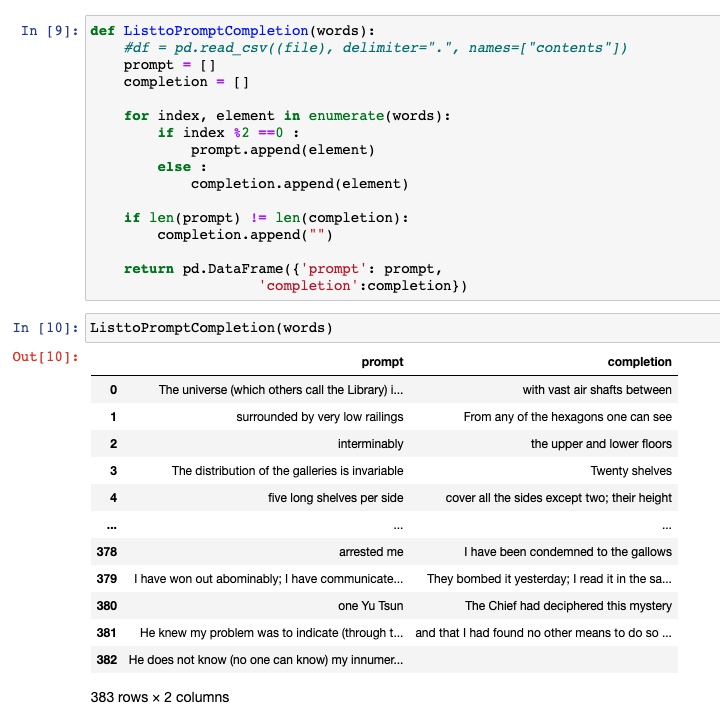

Fine-tune material:

I have chosen two of Jorge Luis Borges' works (The Library of Babel and The Garden of Forking Paths) as my fine-tuning material. Contextually, they represent the fluidity of language in the form of text, and I hope to harness Borges' talent for rendering spaces with text.

*Splitting sentences at commas and periods, then indexing the fragments sequentially into prompts and completions.*
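The splitting step could look roughly like the sketch below. It is only an illustration under assumptions: the function names are hypothetical, the sample text is a placeholder rather than Borges' prose, and pairing each fragment with the one that follows it is one possible reading of "indexing sequentially". The `prompt` / `completion` keys follow the JSONL layout used for GPT-3 fine-tuning data.

```python
import json
import re


def split_fragments(text):
    """Split text at commas and periods, dropping empty fragments."""
    parts = re.split(r"[,.]", text)
    return [part.strip() for part in parts if part.strip()]


def to_pairs(fragments):
    """Pair each fragment (prompt) with the fragment after it (completion).

    Assumption: overlapping pairs, so fragment 2 is both the completion of
    fragment 1 and the prompt for fragment 3.
    """
    return [
        {"prompt": fragments[i], "completion": " " + fragments[i + 1]}
        for i in range(len(fragments) - 1)
    ]


def to_jsonl(pairs):
    """Serialize the pairs as one JSON object per line."""
    return "\n".join(json.dumps(pair, ensure_ascii=False) for pair in pairs)


# Placeholder text standing in for the source material.
sample = "First clause, second clause. Third clause."
print(to_jsonl(to_pairs(split_fragments(sample))))
```

The leading space in each completion is a common convention for this kind of data, not something the workflow above requires.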

In general, I am looking at two sides of my interaction with GPT-3 → prompting the fine-tuned model to generate information about space. The first part is the way I approach the prompt, and the second part is my rendering of its outcomes (text for now).

Approaching the prompt:

Drawing inspiration from Anna Garbier's Exercises in Meta-cohesion, I set up my interactions with the goal of receiving descriptions of space. I start with a large-scale, human-made prompt (written by me); once I have received enough coherent information, I update my prompt to try to get a more detailed description.

For example, say I give "Walking towards the middle" as the first prompt. "The middle" would likely situate the model in the contextual setting of a space, and the verb "walking" provides the additional framing of a possibly "human" perspective on that space. Lastly, "the middle" is included in the hope that the model will somehow render the space in a sequence that starts from the middle and moves outward.

Then let's say the model gives me a paragraph of around 300 to 400 characters (60 to 80 words). I would generally come away with a broad and cohesive understanding of the space. However, I would often get objects occupying the space with next to no description (usually only their position relative to the space). From there I prompt the model again with a new prompt (also human-written), intending to get a more detailed description of said objects. For example, if the first response contains the sentence "In the center was a large spherical clockwork." I revise my prompt to "The spherical clockwork is " so that the model gives me a more detailed description of the clockwork that appeared in the first output.
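The refinement loop above is done by hand, but its shape can be sketched in code. This is a rough sketch under assumptions: the regular expression, the function names, and the `generate` callable (a stand-in for whatever produces the model's text) are all hypothetical, and the pattern keeps adjectives that a human-written follow-up might drop.

```python
import re

# Hypothetical pattern: finds phrases such as "was a large spherical clockwork".
OBJECT_PATTERN = re.compile(r"\b(?:is|was) an? ([^.,;]+)")


def find_objects(response):
    """Return the object phrases mentioned in a model response."""
    return [match.strip() for match in OBJECT_PATTERN.findall(response)]


def follow_up_prompt(object_phrase):
    """Build an open-ended prompt asking for more detail about one object."""
    return f"The {object_phrase} is "


def refine(first_prompt, generate, max_follow_ups=3):
    """Run the first prompt, then one follow-up prompt per object found.

    `generate` is any callable mapping a prompt string to output text.
    Returns a list of (prompt, response) tuples.
    """
    transcript = [(first_prompt, generate(first_prompt))]
    for phrase in find_objects(transcript[0][1])[:max_follow_ups]:
        prompt = follow_up_prompt(phrase)
        transcript.append((prompt, generate(prompt)))
    return transcript
```

In practice the follow-up prompts are still written by hand, so a helper like this would only suggest candidates to revise.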

Rendering the output:

This part proved to be the hardest, and it is where I spent most of my time. The very high-level TLDR version of my intention here is to "Retain the flexibility of text, but somehow in a form that is tangible to a certain degree."

Prologue:

What is possibly the most interesting aspect of NLP models for the majority of people is the occasional "uncanny" text they generate. Even without proper training, I very much drew a parallel between the model's output and poems.

Like the metaphor motifs I mentioned before, I am very interested in the idea of poetic computation, which essentially involves computational translations of certain forms of information. In the case of NLP models, the "uncanny valley" outputs garner their power from a "hyperreal" feeling of "humanness" juxtaposed with a relatively small number of things that don't seem to make sense. That's what intrigued me the most. Again I drew parallels with literature, essentially categorizing these outputs as "magical realism". When NLP outputs are treated with enough genuine sensitivity, words take on the power of reorienting reality.

Using a game engine:

I have chosen to use a game engine, mostly because I look at game engines as simulations with the ability to manipulate certain physical properties in ways we usually can't, or can't easily, in real life. A good example is non-Euclidean geometry, showcased by this great YouTube video. Things like this (for me at least) seem to be a great translation of something that would otherwise only be present in a relatively literary setting, where words/text aren't tasked with the responsibility of portraying context with tangible visuals.

However, I didn't get to figure out the non-Euclidean part in time, which didn't help at my crit session: the result came across as a game environment that's pretty literal.

Still, going back to the TLDR version of my intention: "Retain the flexibility of text, but somehow in a form that is tangible to a certain degree." Here I am looking at storytellers like Charlie Kaufman and Samuel Beckett (I acknowledge his inconsistency, but I would be remiss not to mention M. Night Shyamalan). They seem to be masters of navigating this realm of magical reality: they easily identify leverage points at which to insert their outlandish creativity into a setting that is achingly mundane, and the tension between the "ground truth reality" and the "elevated" one is extremely nuanced and precise.

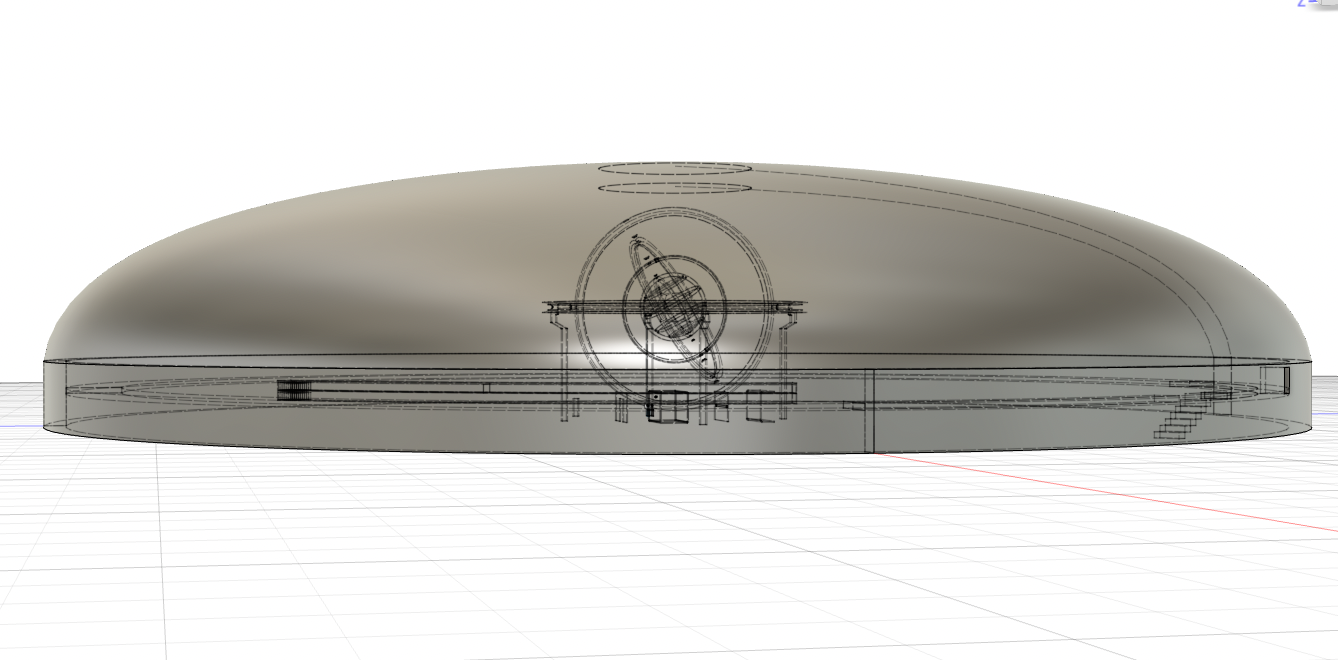

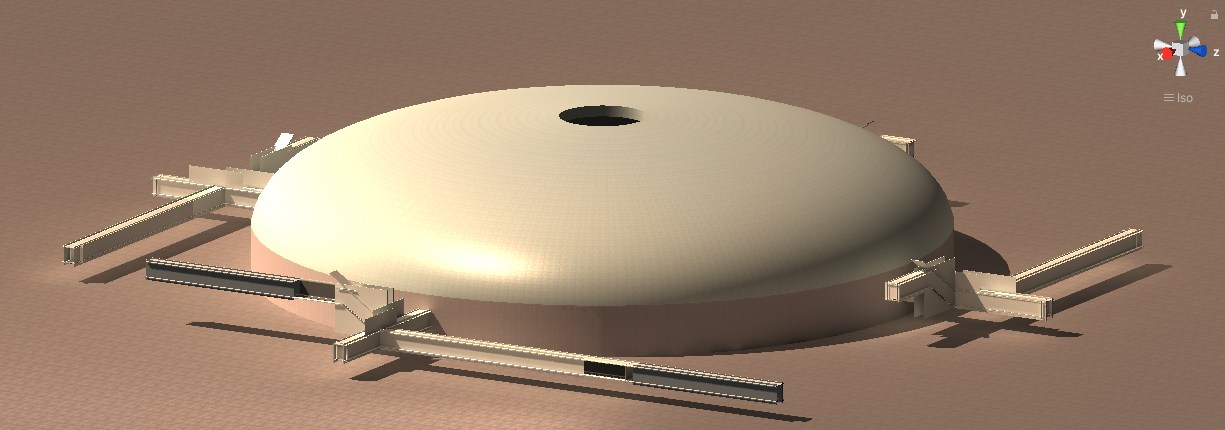

Asset modeling:

After some simple sketches, I moved to Fusion 360 to model out the virtual space.

Unity production:

For my last stage, I imported the models into Unity and laid them out as described in the GPT-3 output. I added a first-person controller with a head-bobbing camera to simulate real-life movement and make the experience more immersive.

Below are the final outputs I chose as prompts for my space:

Playtest feedback:

I didn't really leave enough time for playtests beyond my flatmate, whose actions suggested the need for a better wayfinding system around the spawn point of scene 2. I ended up eliminating the branches to allow only one linear path to the center chamber. This betrays GPT-3's output a bit, which could be something to work on later.

To all the in-game characters

| Status | Released |

| Author | chooseimage |
